World Models

In RL, the credit assignment problem makes it difficult to train large models with many parameters, so mostly small neural networks are used. In this paper, they train large RNN-based agents by dividing the agent into a large world model, which learns an unsupervised representation of the agent's environment, and a small controller model, which learns a highly compact policy to perform the task. Because the controller is small, the credit assignment problem only has to be solved in a small search space.
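To get a feel for how compact the controller is, here is a quick parameter count for a linear controller a = W[z, h] + b, where z is the latent vector from the vision model and h is the RNN hidden state. The sizes are assumptions taken from the paper's CarRacing setup (32-dimensional z, 256-dimensional h, 3 continuous actions), and the function name is hypothetical.

```python
def linear_controller_params(z_dim: int, h_dim: int, action_dim: int) -> int:
    """Count the parameters of a linear policy a = W [z, h] + b."""
    weights = (z_dim + h_dim) * action_dim  # one weight per (input feature, action) pair
    biases = action_dim                     # one bias per action
    return weights + biases


# Assumed CarRacing sizes: z in R^32, h in R^256, 3 actions.
print(linear_controller_params(32, 256, 3))  # 867
```

A search space of a few hundred parameters is small enough for a black-box optimizer, while the large vision and memory models are trained separately without needing the reward signal.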

Note: The credit assignment problem in RL arises when feedback is only given at the end of the episode: it becomes difficult to figure out which steps should receive credit or blame for the final result.
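A small illustration of why a single end-of-episode reward makes credit assignment hard: computing discounted returns G_t = r_t + γ·G_{t+1} for a sparse-reward episode gives every step a share of the same terminal reward, scaled only by its distance from the end, regardless of whether the action taken at that step actually helped. The discount factor γ = 0.9 and the reward sequence are arbitrary illustrative choices.

```python
from typing import List


def discounted_returns(rewards: List[float], gamma: float) -> List[float]:
    """Compute G_t = r_t + gamma * G_{t+1}, working backwards from the last step."""
    returns = [0.0] * len(rewards)
    running = 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


# Sparse feedback: no reward until the final step of a 5-step episode.
rewards = [0.0, 0.0, 0.0, 0.0, 1.0]
print([round(g, 4) for g in discounted_returns(rewards, gamma=0.9)])
# [0.6561, 0.729, 0.81, 0.9, 1.0]
```

The returns differ only by position in the episode, so this signal alone cannot tell the learner which of the earlier actions mattered.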

The advantage of learning a world model is that the agent can be trained inside the simulated environment generated by the world model itself, and the resulting policy can later be used in the actual environment.
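A minimal sketch of what "learning inside the world model" means: a policy is scored purely by rolling it out in a model that predicts the next latent state and a reward, with no calls to the real environment. `ToyWorldModel`, its random linear dynamics, its reward and `dream_rollout` are all hypothetical stand-ins for a trained world model, used only for illustration.

```python
import numpy as np


class ToyWorldModel:
    """Hypothetical stand-in for a trained world model (VAE + MDN-RNN in the paper).

    Given a latent state z and an action, it samples the next latent state and a
    reward. The dynamics (a fixed random linear map plus Gaussian noise) and the
    reward are invented purely for illustration.
    """

    def __init__(self, z_dim: int, action_dim: int, seed: int = 0):
        self.rng = np.random.default_rng(seed)
        self.A = 0.5 * self.rng.standard_normal((z_dim, z_dim)) / np.sqrt(z_dim)
        self.B = self.rng.standard_normal((z_dim, action_dim))

    def step(self, z: np.ndarray, action: np.ndarray):
        noise = 0.1 * self.rng.standard_normal(z.shape)
        z_next = self.A @ z + self.B @ action + noise
        reward = -float(np.sum(z_next ** 2))  # hypothetical: reward staying near the origin
        return z_next, reward


def dream_rollout(model, policy, z0: np.ndarray, steps: int) -> float:
    """Score a policy entirely inside the world model; the real environment is never called."""
    z, total = z0, 0.0
    for _ in range(steps):
        z, reward = model.step(z, policy(z))
        total += reward
    return total


model = ToyWorldModel(z_dim=4, action_dim=2)
W = np.zeros((2, 4))  # a trivial linear policy, just to have something to evaluate
print(dream_rollout(model, lambda z: W @ z, z0=np.ones(4), steps=20))
```

Any black-box policy search can use a function like `dream_rollout` as its fitness function; the policy it finds is what would then be deployed in the actual environment.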

One problem is that the agent has access to all of the hidden states of the generated environment (comparable to seeing the internal state of the game engine, not just the observations a player gets). This allows the agent to exploit imperfections of the generated environment: it can easily find an adversarial policy that fools the world model. Such imperfections are unavoidable because the world model is only an approximate estimate of the real environment (e.g., even the number of monsters generated in the VizDoom environment is not exact), so they will be exploited by our agent (the controller model). To prevent this, we can train the controller inside a noisier generated environment, where the amount of randomness is controlled by a temperature parameter τ: a higher τ makes the generated environment more stochastic and therefore harder to exploit.
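The temperature knob can be sketched for a single latent dimension modelled as a Gaussian mixture, the kind of output the MDN in the world model produces. One common way to apply τ, assumed here rather than taken from these notes, is to divide the mixture logits by τ before the softmax and scale each component's standard deviation by √τ. The mixture parameters below are made-up example values, and `sample_gmm` is a hypothetical helper.

```python
import numpy as np


def sample_gmm(logits, means, stds, temperature, rng):
    """Draw one sample from a 1-D Gaussian mixture at temperature tau.

    Assumed scheme: logits / tau before the softmax, stds * sqrt(tau).
    A higher tau flattens the mixture weights and widens every component.
    """
    scaled = np.asarray(logits, dtype=float) / temperature
    weights = np.exp(scaled - scaled.max())  # numerically stable softmax
    weights /= weights.sum()
    k = rng.choice(len(weights), p=weights)  # pick a mixture component
    std = stds[k] * np.sqrt(temperature)
    return means[k] + std * rng.standard_normal()


rng = np.random.default_rng(0)
logits = [2.0, 0.0, -1.0]  # example mixture logits
means = [0.0, 1.5, -2.0]
stds = [0.1, 0.3, 0.5]
calm = [sample_gmm(logits, means, stds, 0.5, rng) for _ in range(2000)]
noisy = [sample_gmm(logits, means, stds, 1.5, rng) for _ in range(2000)]
print(np.std(calm), np.std(noisy))  # the high-temperature samples are more spread out
```

With τ < 1 the sampler almost always picks the dominant component, giving a calm, predictable environment; with τ > 1 unlikely components are sampled more often, which is what makes the generated environment harder for the controller to exploit.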

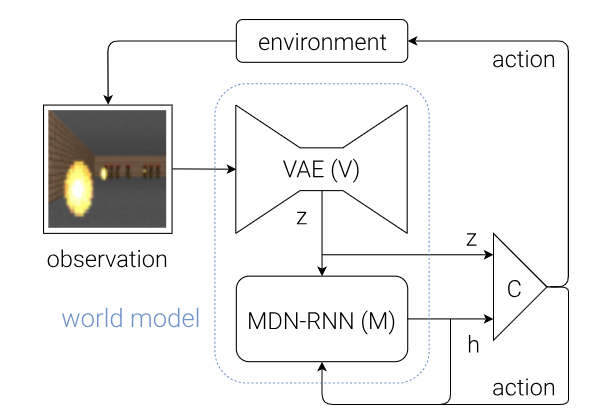

Three parts

- VAE as the vision model: encodes the high-dimensional 2D image observed at each time frame into a low-dimensional latent vector z [spatial compression].

- RNN as the memory model: predicts the future by compressing the sequence of encoded frames over time [temporal compression]. Because complex environments are stochastic in nature, the RNN needs to output a stochastic prediction, i.e., a probability distribution p(z) over the next latent vector (conditioned on the current z, the action and the RNN hidden state h) instead of a single deterministic z. It is followed by a mixture density network (MDN) that models p(z) as a mixture of Gaussian distributions.

- Finally, the controller model decides the action. It is a small, linear model (mapping the concatenation of z and the RNN hidden state h to an action) trained with the Covariance-Matrix Adaptation Evolution Strategy (CMA-ES).
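The controller a = W[z, h] + b can be sketched together with a black-box evolution strategy. For brevity this swaps in a plain isotropic Gaussian ES (sample a population around the current mean, keep the best candidates, move the mean to their average) instead of CMA-ES, which additionally adapts a full covariance matrix and the step size. The toy sizes, the `fitness` objective (matching a hidden target policy on random inputs) and all helper names are hypothetical stand-ins for the cumulative reward of a rollout.

```python
import numpy as np

Z_DIM, H_DIM, ACTION_DIM = 4, 8, 2  # toy sizes, chosen only for illustration
IN_DIM = Z_DIM + H_DIM
N_PARAMS = IN_DIM * ACTION_DIM + ACTION_DIM


def unpack(params: np.ndarray):
    """Split a flat parameter vector into the weight matrix W and bias b."""
    W = params[: IN_DIM * ACTION_DIM].reshape(ACTION_DIM, IN_DIM)
    b = params[IN_DIM * ACTION_DIM:]
    return W, b


def controller(params: np.ndarray, z: np.ndarray, h: np.ndarray) -> np.ndarray:
    """Linear policy a = W [z, h] + b."""
    W, b = unpack(params)
    return W @ np.concatenate([z, h]) + b


# Hypothetical toy task standing in for "cumulative reward of a rollout":
# fitness is higher the closer the actions are to those of a hidden target policy.
_task_rng = np.random.default_rng(42)
X = _task_rng.standard_normal((32, IN_DIM))  # each row is a concatenated [z, h] input
_W_t, _b_t = unpack(_task_rng.standard_normal(N_PARAMS))
TARGET_ACTIONS = X @ _W_t.T + _b_t


def fitness(params: np.ndarray) -> float:
    W, b = unpack(params)
    return -float(np.mean((X @ W.T + b - TARGET_ACTIONS) ** 2))


def simple_es(fitness_fn, dim, pop_size=64, n_elite=8, sigma=0.5, iters=200, seed=0):
    """Isotropic Gaussian ES (not CMA-ES): sample around the mean, average the elites.

    CMA-ES would also adapt a full covariance matrix and the step size; here sigma
    simply follows a fixed geometric decay.
    """
    rng = np.random.default_rng(seed)
    mean = np.zeros(dim)
    for _ in range(iters):
        population = mean + sigma * rng.standard_normal((pop_size, dim))
        scores = np.array([fitness_fn(p) for p in population])
        elites = population[np.argsort(scores)[-n_elite:]]  # highest-fitness candidates
        mean = elites.mean(axis=0)
        sigma *= 0.98
    return mean


best = simple_es(fitness, N_PARAMS)
print(fitness(np.zeros(N_PARAMS)), fitness(best))  # fitness should move toward 0
```

Because the optimizer only needs fitness values, never gradients, the same loop works whether the score comes from the real environment or from a rollout inside the world model; keeping the controller linear keeps the parameter vector small enough for this kind of search.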